We’re in the thick of the AI chatbot age, and man, did it swamp us with the speed of… an AI chatbot responding to a well-crafted prompt.

ChatGPT was only a few months into broad adoption when OpenAI launched its more creative, more collaborative kid sister, GPT-4. Now here we are, getting acquainted with Google’s AI chatbot competitor, Bard, which opened its user waitlist in late March 2023.

Anyone looking to level up and leverage AI is feverishly following the developments, sharpening their prompt skills, testing, testing, testing, and keeping an eye on interesting launches. Google’s Bard launch has, naturally, been among the most anticipated.

So, how does Bard stack up against ChatGPT?

Comparing Bard and ChatGPT

Plenty of deeply technical AI experts are busily breaking down the differences between the large language models that power Bard and ChatGPT: Google’s Language Model for Dialogue Applications (LaMDA) and OpenAI’s Generative Pre-trained Transformer (GPT).

Rather than focusing on inner workings here, let’s explore their output.

Both tools are generative AI chatbots designed to take in conversational prompts and deliver quick, comprehensive responses. We asked, they delivered.

As with all things AI, the best way to understand strengths and stumbling blocks is to play. An easy experiment: How do Bard and ChatGPT tackle the same prompt?

AI prompt parameters

Chatbot results depend (significantly) on your prompt. Give a chatbot a bland, basic prompt and it’ll deliver a bland, basic result. The more specificity and direction you give your AI tool, the better your results get.

“Better” and “good” are subjective terms, but from our perspective, the goal isn’t to copy and paste AI-generated text wholesale. AI can spark ideas, help you organize and focus your research, and gently move you out of blank-page paralysis. “Good” results make creativity and productivity flow.

With those parameters in mind, we can make a decent AI-pples to AI-pples comparison.

The chatbots’ results

Here’s an example prompt, given word-for-word to ChatGPT and Bard:

You’re a local dentist opening a new practice in Nashville, Tennessee. Sketch out unique, creative content ideas you could develop in the coming months for your website and social media.

You’ll be speaking to an audience of current and potential patients. You want them to view you as personable and knowledgeable, and you want to provide them with valuable information that relates to your business. You want to inspire trust and make them think about you when they need to see a dentist.

Don’t suggest obvious ideas. Think creatively and specifically—sketch out concepts and offer suggestions on how to flesh them out.

The AI chatbots delivered these results:

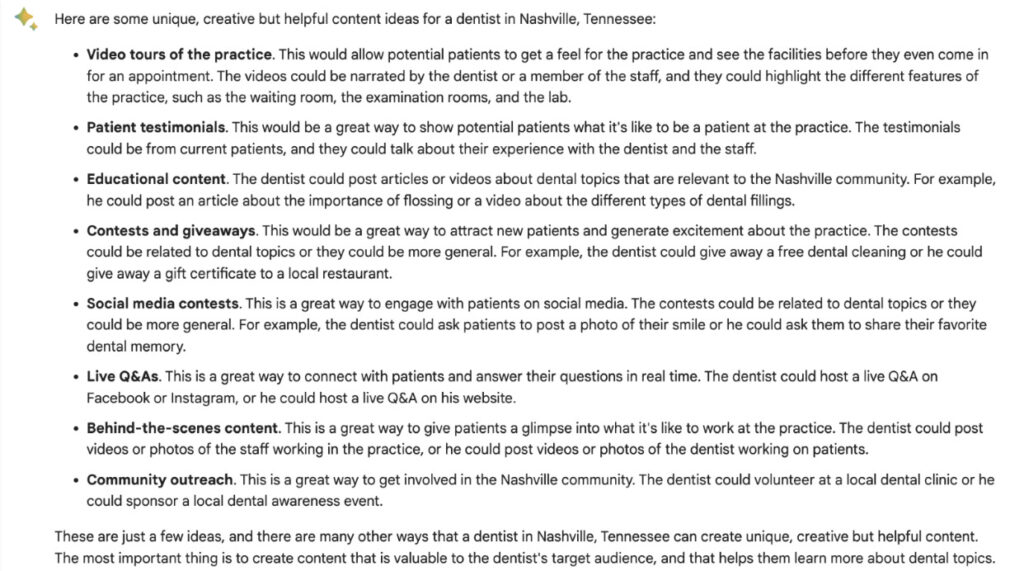

BARD

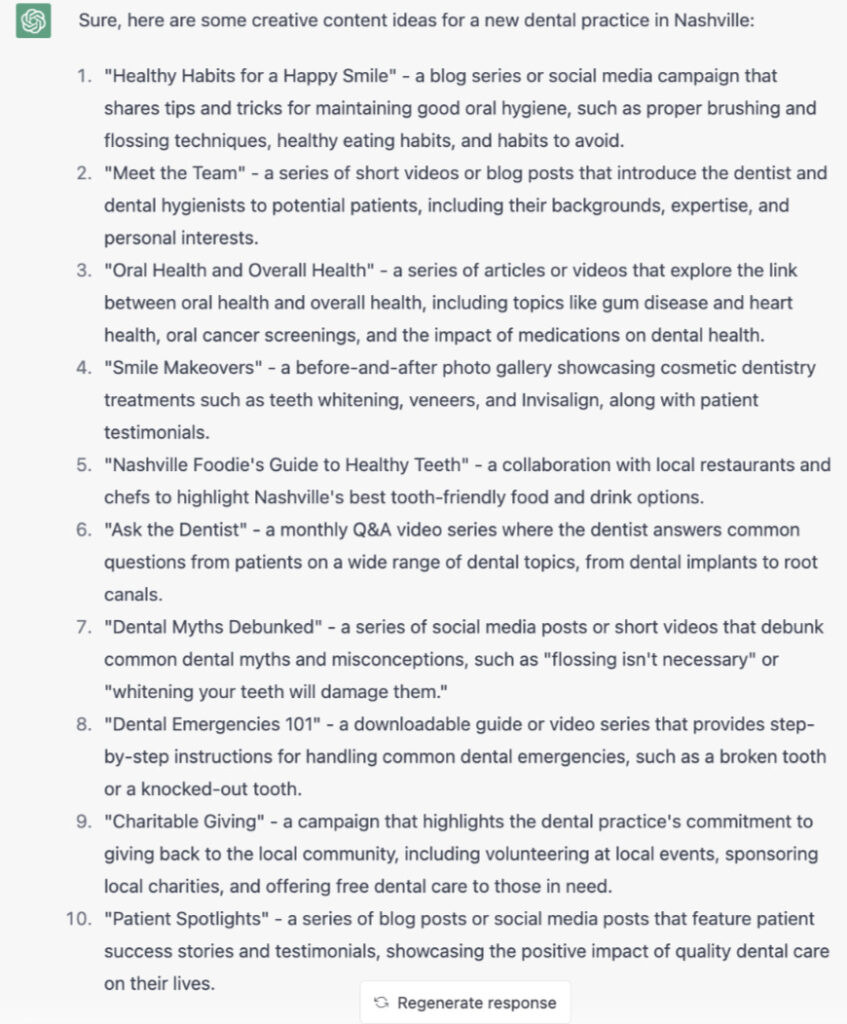

CHATGPT

Initial impressions

What sparks for you, as you read through these results?

For us, Bard offers basic, robotic suggestions. “Do testimonials.” “Host a Live.” Not much to sink your teeth into conceptually; not much to get your creative wheels spinning.

ChatGPT picked up on the “creative” request, offering specific suggestions and detailed breakdowns of how you might approach iterating on those ideas.

You can really see the depth of ChatGPT’s background knowledge in the “Nashville Foodie’s Guide” suggestion. We didn’t tell the chatbot about Nashville’s growing reputation as a food-culture hub, but it picked up on that and riffed on it. That adds a layer of local relevance that personalizes the content. A dentist in Clayton, Delaware, wouldn’t necessarily lean into foodie content. A Nashville one could, and probably should.

To use these tools more effectively, we’d course-correct, explain, reshape and refine. With time and tweaks, we’d get closer and closer to a list worth fleshing out. That process, and how these two tools would fare in it, is an experiment in and of itself.

To start, Bard looks like it has some catching up to do. And this is Bard pitted against the free version of ChatGPT, not the newer, more robust GPT-4. It’ll be interesting to see how that gap closes (or widens?) as both tools continue to evolve.

What’s your take?

Have you been experimenting with AI? What are your likes, dislikes, preferences and impressions?

We’re excited about integrating these tools into the creative process to enhance and streamline it, not to replace it. Human brains are where new, exciting, unexpected and eye-opening ideas and voices come in. AI can help us see what’s already out there and kickstart our ability to iterate.

Used right, chatbots might make creative work—and the way we do it—better and more fluid. And if these first few months of the AI revolution are any indication, we’re in for head-spinning growth.

If you need a partner to help shape content marketing ideas into sharp, brand-inspired finished products that connect and inspire, our team can help. Contact Snapshot here.